On-Device Machine Learning

Run machine learning models in your Android, iOS, and Web apps

Google offers a range of solutions for using on-device ML to unlock new experiences in your apps. To tackle common challenges, we provide easy-to-use, turn-key APIs. For more custom use cases, we help you train your model, integrate it into your app, and deploy it in production.

This site guides you to the Google solutions and tools that meet your needs.

The benefits of On-Device Machine Learning

Low latency

Unlock new user experiences by processing text, audio, and video in real time

Keep data on-device

Perform inference locally without sending user data to the cloud

Works offline

No need for a network connection or a cloud-hosted service

Turn-key solutions

To tackle common tasks with ML, we offer easy-to-use, production-ready APIs through the ML Kit SDK. These APIs are built on high-quality pre-trained models and are easy to integrate into Android and iOS apps.

Custom solutions and tools

We offer off-the-shelf pre-trained models that can be deployed in mobile and web apps. For more specific use cases, we offer tools to retrain existing models or to train new ones from scratch.

Pick or train a model

- TensorFlow Hub: Discover and evaluate pre-trained models
- TensorFlow Lite Model Maker: Customize models using transfer learning
- TensorFlow: Develop and train machine learning models
- AutoML Edge: Train custom models in the cloud
- TF Model Optimization Toolkit: Optimize your models for deployment

Integrate into your app

- Android Studio: Import and use TensorFlow Lite models
- TensorFlow Lite: Run inference on mobile and edge devices
- TensorFlow.js: Run inference in the browser
- MediaPipe: Cross-platform, customizable ML solutions for live and streaming media

Productionize and deploy

- Firebase Model Serving: Host, deploy, and experiment with custom models

Build your first on-device ML app

The learning pathways below provide a step-by-step guide to help you write your first on-device machine learning app.

Audio Classification

Write an app that classifies sounds in the environment around you. In this example, the app identifies birds by their song.

Image Classification

Build an app that takes a picture and returns a list of labels that describe the image. Then train a model to recognize new labels and integrate it into your app.

Object Detection

Detect specific objects within an image and draw bounding boxes around them. Train a model to identify new objects and integrate the model in your app.

Text Classification for Mobile

Create an app that detects whether users are posting spam in your chat room.

Text Classification for Web

Use machine learning on your website to help filter comment spam.

Visual Product Search

Take a picture with your camera and search for matching products.

On-device ML in the real world

Here are some examples of how developers use on-device machine learning to tackle real-world challenges.

BLOG

Making the world more accessible for people with vision impairments

Lookout by Google helps make the physical world more accessible for users who are blind or have low vision. Whether users are quickly skimming text, capturing full documents, or identifying objects and packaged food, Lookout relies on on-device ML models powered by TensorFlow Lite.

ML KIT

adidas uses on-device ML for an augmented in-store shopping experience

Learn how adidas is using ML Kit’s Object Detection & Tracking API in its Android and iOS apps to create an intuitive in-store visual search experience that makes it easier for customers to find and try on their next pair of adidas shoes. On-device ML makes it possible to detect shoes seamlessly in real time and to return an image-recognition match against hundreds of products within seconds.

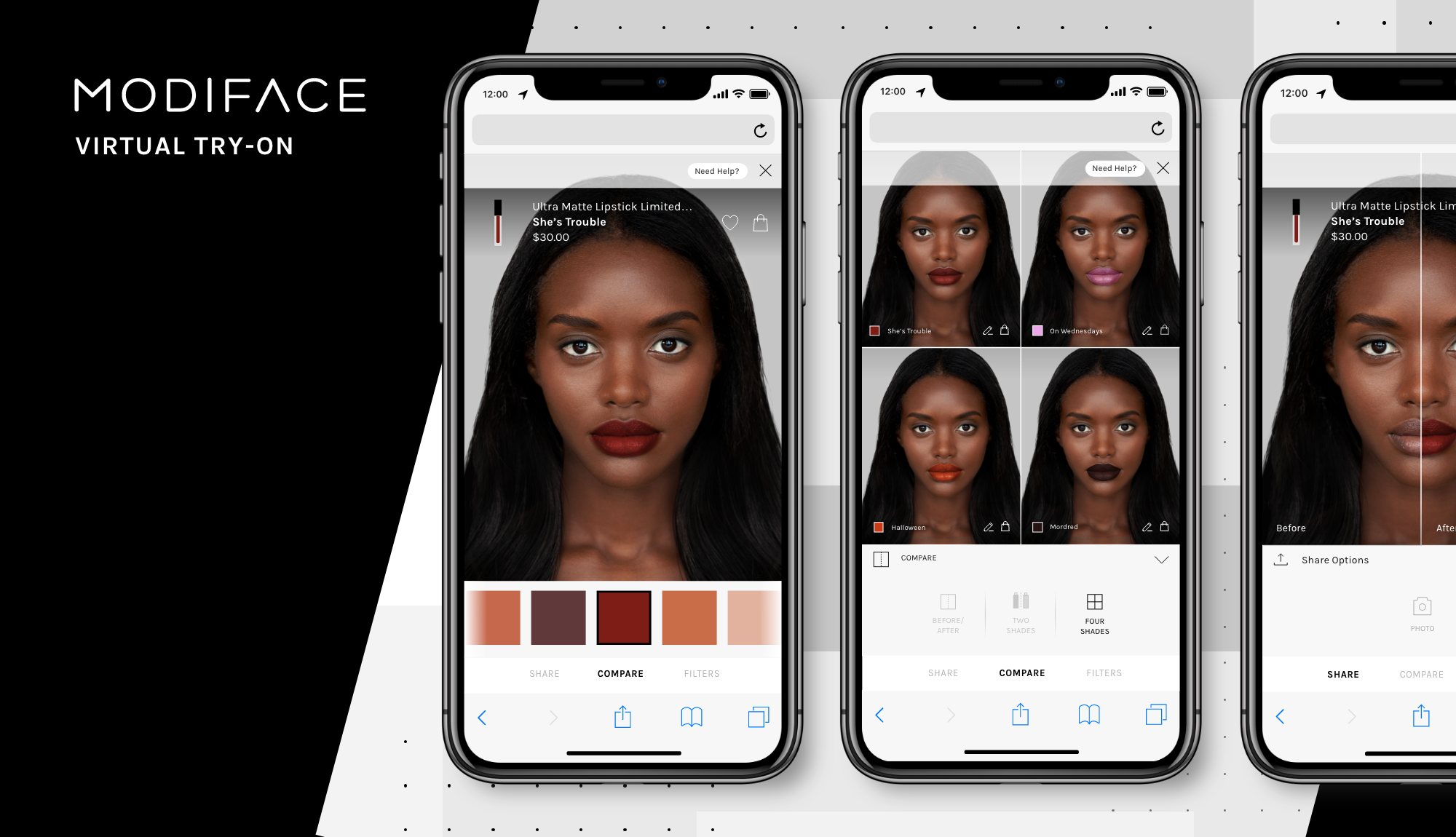

TensorFlow.js

Modiface uses TensorFlow.js for AR makeup try-on in the browser

Modiface uses the TensorFlow.js face detection model to identify key facial features and combines them with WebGL shaders, so users can digitally try on L'Oréal brand makeup entirely in the browser. Because processing stays on the user's device, user privacy is preserved.

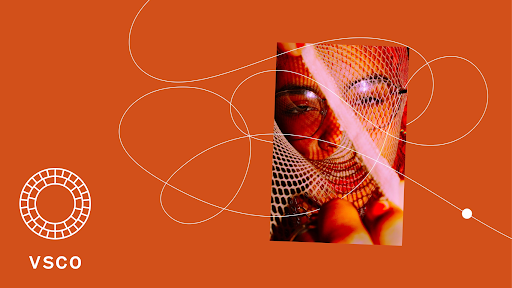

TENSORFLOW LITE

VSCO uses on-device ML to recommend image presets

With hundreds of VSCO photo presets to choose from, helping users find and try new presets was a challenge. With on-device ML, the VSCO app now analyzes uploaded images and suggests the presets that best complement them through its "For this photo" feature.